Useful information

Prime News delivers timely, accurate news and insights on global events, politics, business, and technology

At its own GTC AI show in San Jose, California, earlier this month, graphics-chip maker Nvidia unveiled a large number of partnerships and announcements for its generative AI products and platforms. At the same time, in San Francisco, Nvidia held behind-closed-doors showcases alongside the Game Developers Conference to show game makers and the media how its generative AI technology could augment the video games of the future.

Last year, Nvidia’s GDC 2024 showcase had hands-on demonstrations in which I could talk to non-playable characters, or NPCs, in pseudo-conversations. They replied to the things I typed with reasonably contextual responses (although not as natural as scripted ones). AI also radically modernized old games for a contemporary graphics look.

This year, at GDC 2025, Nvidia once again invited industry members and press into a hotel room near the Moscone Center, where the convention was held. In a large room full of computers packed with GeForce 5070, 5080 and 5090 GPUs, the company showed more ways in which players could see generative AI remastering old games, offering new options for animators and evolving NPC interactions.

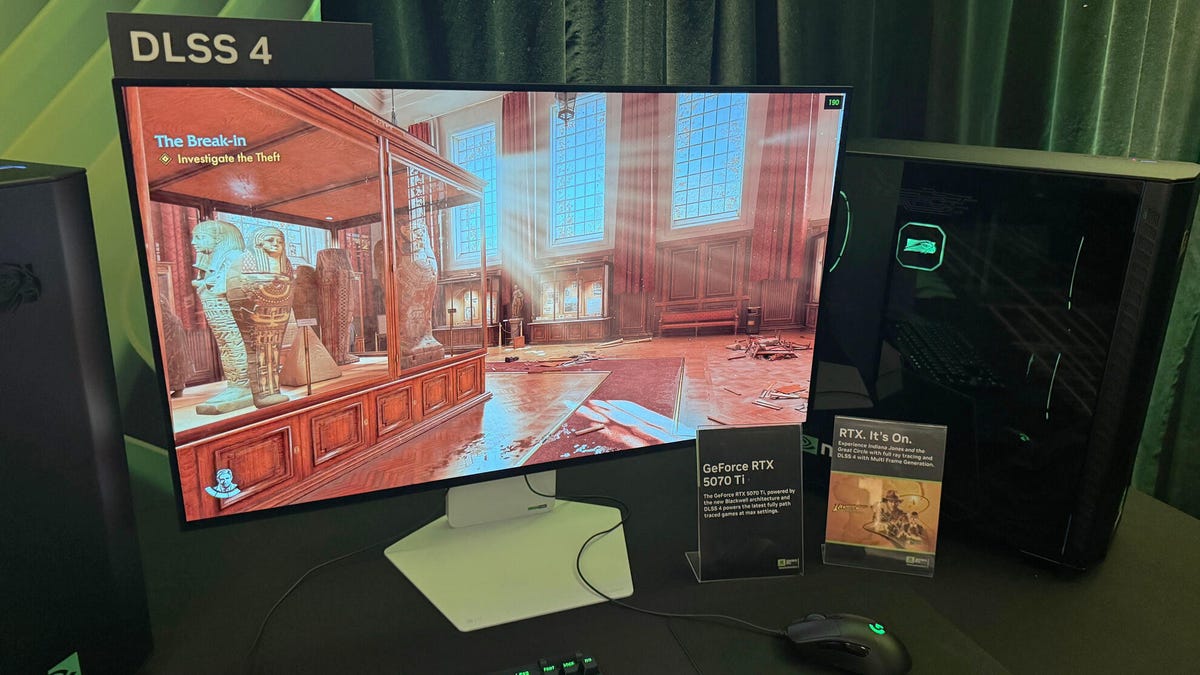

Nvidia also demonstrated how its latest AI graphics rendering technology, DLSS 4 for its GPU line, improves image quality, light paths and frame rates in modern games, features that affect players every day, although these efforts by Nvidia are more conventional than its other experiments. While some of these advances depend on studios implementing new technology in their games, others are available right now for players to try.

Nvidia detailed a new tool that generates character animations based on text prompts, something like using ChatGPT in iMovie to make the characters in your game move through scripted action. The goal? Save developers time. Using the tool could turn the programming of a several-hour sequence into a task of several minutes.

Body Motion, as the tool is called, can be plugged into many digital content creation platforms; Nvidia Senior Product Manager John Malaska, who ran my demonstration, used Autodesk Maya. To start the demonstration, Malaska set up a sample situation in which he wanted a character to jump over a box, land and move forward. On the timeline of the scene, he selected the moment for each of those three actions and wrote text prompts so that the software would generate the animation. Then it was time to tinker.

To refine his animation, he used Body Motion to generate four different variations of the character's jump and chose the one he wanted. (All animations are generated from licensed motion capture data, Malaska said.) Then he specified exactly where he wanted the character to land, and then selected where he wanted them to end up. Body Motion simulated all the frames between those carefully selected pivot points, and boom: animation segment achieved.

In the next section of the demonstration, Malaska had the same character walk through a fountain to reach a set of stairs. He could edit with text prompts and timeline markers to make the character sneak around and avoid the courtyard fixtures.

“We are excited about this,” said Malaska. “It will really help people speed up and accelerate workflows.”

He pointed to situations in which a developer gets an animation but wants it to play out slightly differently and sends it back to the animators for edits. A much slower scenario would be if the animations had been based on real motion capture: if the game required that level of fidelity, getting mocap actors back to record could take days or months. Adjusting animations with Body Motion, which draws on a library of motion capture data, can avoid all that.

I would be remiss not to worry about motion capture artists and whether Body Motion could be used to bypass their work in part or in whole. Generally, this tool could be used to make animatics and virtually storyboard sequences before bringing in professional artists to motion capture the finished scenes. But like any tool, everything depends on who is using it.

Body Motion is scheduled to be released later in 2025 under the Nvidia Enterprise License.

At GDC last year, I had seen a Half-Life 2 remaster made with Nvidia's platform for modders, RTX Remix, which is meant to give new life to old games. Nvidia's latest stab at reviving Valve's classic game was released to the public as a free demo, which players can download on Steam to see for themselves. What I saw of it in Nvidia’s press room was ultimately a tech demo (and not the complete game), but it still shows what RTX Remix can do to update old games to meet modern graphics expectations.

Last year's RTX Remix Half-Life 2 demonstration was about seeing how flat wall textures could be updated with depth effects to, for example, make them look cobbled, and that is also present here. When looking at a wall, “the bricks seem to jut out because they use parallax occlusion mapping,” said Nyle Usmani, senior product manager of RTX Remix, who led the demonstration. But this year’s demonstration was more about lighting interaction, even to the point of simulating the shadow that passes through the glass covering the dial of a gas meter.

Usmani guided me through all the lighting and fire effects, which modernized some of the most haunting parts of Half-Life 2’s Ravenholm area. But the most striking application was in an area where the iconic headcrab enemies attack, when Usmani paused and pointed out how the backlight filtered through the fleshy parts of the grotesque pseudo-zombies, making them glow a translucent red, much like what happens when you put a finger in front of a flashlight. Coinciding with GDC, Nvidia released this effect, called subsurface scattering, in a software development kit so that game developers can start using it.

RTX Remix has other tricks that Usmani pointed out, such as a new neural shader in the latest version of the platform, the one used in the Half-Life 2 demonstration. Essentially, he explained, a group of neural networks trains live on the game data while you play and adapts the indirect lighting to what the player sees, so that areas are lit more as they would be in real life. In one example, he switched between the old and new RTX Remix versions, showing, in the new version, light filtering correctly through the broken beams of a garage. Better yet, it bumped the frame rate to 100 frames per second, up from 87.
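For a rough sense of what that jump from 87 to 100 frames per second means, here is a minimal Python sketch of the arithmetic. The two frame rates come from the demonstration; the helper names `frame_time_ms` and `percent_gain` are purely illustrative and not part of any Nvidia tool.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def percent_gain(old_fps: float, new_fps: float) -> float:
    """Relative frame-rate increase, as a percentage of the old rate."""
    return (new_fps - old_fps) / old_fps * 100.0


if __name__ == "__main__":
    old_fps, new_fps = 87.0, 100.0  # figures quoted in the demo
    saved = frame_time_ms(old_fps) - frame_time_ms(new_fps)
    print(f"Frame-rate gain: {percent_gain(old_fps, new_fps):.1f}%")
    print(f"Frame time: {frame_time_ms(old_fps):.2f} ms -> {frame_time_ms(new_fps):.2f} ms")
    print(f"Time saved per frame: {saved:.2f} ms")
```

By this estimate, the newer version gains roughly 15 percent in frame rate, or about a millisecond and a half per frame.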

“Traditionally, we would trace a ray and bounce it many times to illuminate a room,” said Usmani. “Now we trace a ray and bounce it only two or three times and then we terminate it, and the AI infers a multitude of bounces after that. Over enough frames, it is almost as if it were calculating an infinite amount of bounces, so we can get more accuracy because it is tracing less rays (and getting) more performance.”

Even so, I was watching the demonstration on an RTX 5070 GPU, which sells for $550, and the demonstration requires at least an RTX 3060 Ti, so owners of older graphics cards are out of luck. “That is purely because path tracing is very expensive, I mean, it is the future, basically the cutting edge, and it is the most advanced path tracing,” said Usmani.

Last year’s NPC AI station showed how non-player characters can respond uniquely to the player, but this year’s Nvidia ACE technology showed how players can suggest new thoughts for NPCs that will change their behavior and the lives around them.

The GPU maker showed the technology plugged into InZOI, a Sims-like game in which players take care of NPCs that have their own behaviors. With an upcoming update, players can toggle on Smart Zoi, which uses Nvidia ACE to insert thoughts directly into the minds of the Zois (characters) they oversee … and then watch them react accordingly. These thoughts cannot go against the Zois' own traits, explained Nvidia GeForce Tech Marketing Analyst Wynne Riawan, so they will send the Zoi in directions that make sense.

“So, by encouraging them, for example, ‘I want to make people’s day feel better,’ it will encourage them to try to talk to more Zois around them,” said Riawan. “Trying is the key word: they still fail. They are like humans.”

Riawan inserted a thought into the Zoi's head: “What if I am just an AI in a simulation?” The poor Zoi was scared but still ran to the public bathroom to brush their teeth, which fits their traits of, apparently, being really into dental hygiene.

These NPC actions that follow player-inserted thoughts are driven by a small language model with half a billion parameters (large language models can range from 1 billion to more than 30 billion parameters, and more parameters give more opportunity for nuanced responses). The one used in the game is based on the 8 billion parameter Mistral NeMo Minitron model, shrunk down so that it can run on older and less powerful GPUs.

“We squish the model down to a smaller model to make it accessible to more people,” Riawan said.

The Nvidia ACE tech runs on-device using the computer's GPU: Krafton, the publisher behind InZOI, recommends a minimum GPU spec of an Nvidia RTX 3060 with 8 GB of VRAM to use this feature, Riawan said. Krafton gave Nvidia a “budget” of one gigabyte of VRAM to ensure that the graphics card has enough resources left to render, well, the graphics. Hence the need to minimize the parameters.
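To see why a one-gigabyte budget pushes toward a half-billion-parameter model, here is a back-of-the-envelope Python sketch. It is only an estimate under stated assumptions: the bytes-per-parameter figures are the standard sizes for common numeric formats, the budget is treated as 1 GiB, and memory for activations and caches is ignored. Neither Nvidia nor Krafton said which precision the in-game model actually uses, and the function names are hypothetical.

```python
GIB = 1024 ** 3  # assumption: the 1 GB "budget" is treated as 1 GiB

# Standard storage sizes for common weight formats (bytes per parameter).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Memory needed for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / GIB


def fits_budget(num_params: float, precision: str, budget_gib: float = 1.0) -> bool:
    """True if the weights alone fit within the VRAM budget."""
    return weight_memory_gib(num_params, precision) <= budget_gib


if __name__ == "__main__":
    models = {"0.5B (in-game model)": 0.5e9, "8B (base model)": 8e9}
    for name, params in models.items():
        for precision in BYTES_PER_PARAM:
            size = weight_memory_gib(params, precision)
            verdict = "fits" if fits_budget(params, precision) else "too big"
            print(f"{name:22s} {precision:5s} {size:6.2f} GiB  {verdict}")
```

Under these assumptions, half a billion parameters at 16-bit precision take roughly 0.93 GiB, just inside the budget, while the 8 billion parameter base model would not fit even at 4 bits per parameter.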

Nvidia is still discussing internally how, or whether, to unlock the ability to use language models with more parameters if players have more powerful GPUs. Players may be able to see the difference, since NPCs “react more dynamically as they react better to their surroundings with a larger model,” Riawan said. “At this time, with this, the emphasis is mainly on their thoughts and feelings.”

An early access version of the Smart Zoi feature will go out to all users for free starting March 28. Nvidia sees it and the Nvidia ACE technology as a stepping stone that could one day lead to truly dynamic NPCs.

“If you have MMORPGs with Nvidia ACE in it, NPCs will not be stagnant and just keep repeating the same dialogue: they can be more dynamic and generate their own responses based on your reputation or something. Like, ‘Hey, you are a bad person, I don’t want to sell you my goods,’” said Riawan.
