Useful information

Prime News delivers timely, accurate news and insights on global events, politics, business, and technology

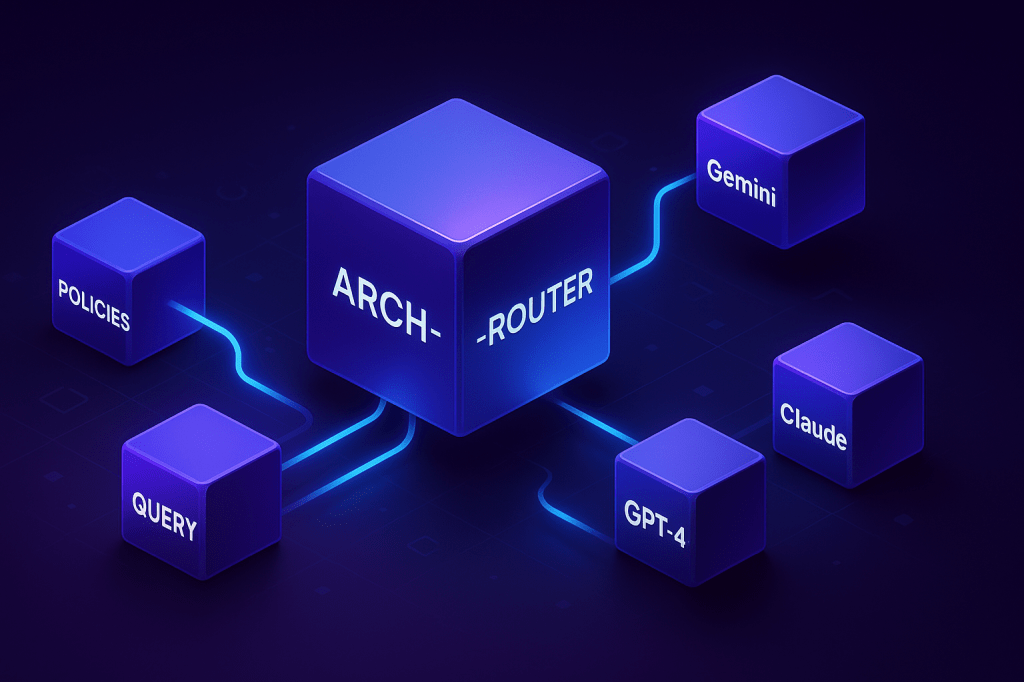

Researchers at Katanemo Labs have introduced Arch-Router, a new routing model and framework designed to intelligently map user queries to the most suitable large language model (LLM).

For companies building products that rely on multiple LLMs, Arch-Router aims to solve a key challenge: how to direct queries to the best model for the job without relying on rigid logic or costly retraining every time something changes.

As the number of LLMs grows, developers are moving from single-model setups to multi-model systems that use the unique strengths of each model for specific tasks (for example, code generation, text summarization or image editing).

LLM routing has become a key technique for building and deploying these systems, acting as a traffic controller that directs each user query to the most appropriate model.

Existing routing methods generally fall into two categories: “task-based routing,” where queries are routed based on predefined tasks, and “performance-based routing,” which seeks an optimal balance between cost and performance.

However, task-based routing struggles with unclear or shifting user intentions, particularly in multi-turn conversations. Performance-based routing, on the other hand, rigidly prioritizes benchmark scores, often neglects real-world user preferences and adapts poorly to new models unless it undergoes costly fine-tuning.

More fundamentally, as the Katanemo Labs researchers note in their paper, “Existing routing approaches have limitations in real-world use. They typically optimize for benchmark performance while neglecting human preferences driven by subjective evaluation criteria.”

The researchers highlight the need for routing systems that “align with subjective human preferences, offer more transparency and remain easily adaptable as models and use cases evolve.”

To address these limitations, the researchers propose a “preference-aligned routing” framework that matches queries to routing policies based on user-defined preferences.

In this framework, users define their routing policies in natural language using a “Domain-Action Taxonomy.” This is a two-level hierarchy that reflects how people naturally describe tasks, starting with a general topic (the domain, such as “legal” or “finance”) and narrowing to a specific task (the action, such as “summarization” or “code generation”).

Each of these policies is linked to a preferred model, allowing developers to make routing decisions based on real-world needs rather than benchmark scores alone. As the paper states, “this taxonomy serves as a mental model to help users define clear and structured routing policies.”
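As a rough illustration of the taxonomy, a policy can be pictured as a small record holding a domain, an action, a natural-language description and a preferred model. The sketch below is an assumption made for clarity, not the schema used by Katanemo Labs; the class name `RoutingPolicy`, its fields and the model labels `model-a` and `model-b` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutingPolicy:
    """Hypothetical record for one domain-action routing policy."""
    domain: str           # general topic, e.g. "legal" or "finance"
    action: str           # specific task, e.g. "summarization"
    description: str      # natural-language description shown to the router
    preferred_model: str  # model the developer wants for this policy

    @property
    def name(self) -> str:
        # Short identifier built from the two taxonomy levels.
        return f"{self.domain}_{self.action}"


# Placeholder policies and model labels, for illustration only.
POLICIES = [
    RoutingPolicy("legal", "summarization",
                  "Summarize contracts, rulings or other legal text.",
                  "model-a"),
    RoutingPolicy("finance", "code_generation",
                  "Write code for financial calculations or reports.",
                  "model-b"),
]

# Policy name -> preferred model, derived from the records above.
MODEL_FOR_POLICY = {p.name: p.preferred_model for p in POLICIES}
```

Keeping the description and the preferred model in the same record makes it easy to see which model a given kind of request will reach.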

The routing process happens in two stages. First, a preference-aligned router model takes the user query and the complete set of policies and selects the most appropriate policy. Second, a mapping function connects the selected policy to its designated LLM.

Because the model selection logic is separated from the policy, models can be added, removed or swapped simply by editing the routing policies, without any need to retrain or modify the router itself. This decoupling provides the flexibility required for practical deployments, where models and use cases constantly evolve.
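The two stages and their decoupling can be sketched in a few lines. This is a minimal sketch under stated assumptions: `keyword_selector` is a toy word-overlap stand-in for the router model (the real system uses a fine-tuned language model), and the function names, policy names and model labels are hypothetical.

```python
from typing import Callable, Dict

Selector = Callable[[str, Dict[str, str]], str]


def keyword_selector(query: str, policies: Dict[str, str]) -> str:
    """Toy stage 1: pick the policy whose description shares the most
    words with the query. A stand-in for a learned router model."""
    query_words = set(query.lower().split())
    return max(
        policies,
        key=lambda name: len(query_words & set(policies[name].lower().split())),
    )


def route(query: str,
          policies: Dict[str, str],
          model_for_policy: Dict[str, str],
          select_policy: Selector = keyword_selector) -> str:
    policy = select_policy(query, policies)  # stage 1: choose a policy
    return model_for_policy[policy]          # stage 2: map policy to model


# Hypothetical policies (name -> description) and model labels.
policies = {
    "image_editing": "edit crop or retouch an image",
    "document_creation": "write or draft a document or report",
}
model_for_policy = {
    "image_editing": "model-a",
    "document_creation": "model-b",
}

first = route("please crop this image", policies, model_for_policy)

# Swapping the model only touches the mapping; the selector is unchanged.
model_for_policy["image_editing"] = "model-c"
second = route("please crop this image", policies, model_for_policy)
```

The selector never sees model names, which is why a model can be replaced without touching stage 1.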

Policy selection is powered by Arch-Router, a compact 1.5B-parameter language model fine-tuned for preference-aligned routing. Arch-Router receives the user query and the complete set of policy descriptions within its prompt. It then generates the identifier of the best-matching policy.

Since the policies are part of the input, the system can adapt to new or modified routes at inference time through in-context learning, without retraining. This generative approach allows the router to use its pretrained knowledge to understand the semantics of both the query and the policies, and to process the entire conversation history at once.
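To make the idea of policies as input concrete, the sketch below builds a prompt that carries the policy descriptions and the conversation history, then validates the identifier a model sends back. The prompt wording, the `<routes>` and `<conversation>` tags, the JSON answer format and the `other` fallback are assumptions for illustration, not the actual Arch-Router prompt format.

```python
import json
from typing import Dict, List


def build_router_prompt(policies: Dict[str, str],
                        conversation: List[Dict[str, str]]) -> str:
    """Assemble a prompt containing every policy and the full history."""
    routes = [{"name": n, "description": d} for n, d in policies.items()]
    return (
        "Select the route that best matches the conversation.\n"
        f"<routes>\n{json.dumps(routes, indent=2)}\n</routes>\n"
        f"<conversation>\n{json.dumps(conversation, indent=2)}\n</conversation>\n"
        'Answer with JSON of the form {"route": "<route name>"}.'
    )


def parse_route(raw_output: str,
                policies: Dict[str, str],
                fallback: str = "other") -> str:
    """Return the policy name from the model output, or a fallback when
    the output is malformed or names an unknown policy."""
    try:
        name = json.loads(raw_output).get("route")
    except (json.JSONDecodeError, AttributeError):
        return fallback
    return name if isinstance(name, str) and name in policies else fallback


# Hypothetical policies and a two-turn conversation.
policies = {
    "image_editing": "Edit, crop or retouch an image.",
    "document_creation": "Write or draft a document or report.",
}
conversation = [
    {"role": "user", "content": "I have a photo from the offsite."},
    {"role": "user", "content": "Can you remove the background?"},
]
prompt = build_router_prompt(policies, conversation)
```

Adding a route in this sketch means adding one entry to `policies`; nothing about the router model changes.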

A common concern with including extensive policies in a prompt is the potential for increased latency. However, the researchers designed Arch-Router to be highly efficient. “While the length of routing policies can get long, we can easily increase the context window of Arch-Router with minimal impact on latency,” explains Salman Paracha, co-author of the paper and founder/CEO of Katanemo Labs. He points out that latency is mainly driven by the length of the output, and Arch-Router's output is just the short name of a routing policy, such as “Image_Editing” or “Document_Creation”.

To build Arch-Router, the researchers fine-tuned a 1.5B-parameter version of the Qwen 2.5 model on a curated dataset of 43,000 examples. They then tested its performance against state-of-the-art proprietary models from OpenAI, Anthropic and Google on four public datasets designed to evaluate conversational AI systems.

The results show that Arch-Router achieves the highest overall routing score, 93.17%, surpassing all other models, including the top proprietary ones, by an average of 7.71%. The model's advantage grew with longer conversations, demonstrating its strong ability to track context across multiple turns.

In practice, this approach is already being applied in several scenarios, according to Paracha. For example, in open-source coding tools, developers use Arch-Router to direct different stages of their workflow, such as “code design,” “code understanding” and “code generation,” to the LLMs best suited to each task. Similarly, companies can route document creation requests to a model like Claude 3.7 Sonnet while sending image editing tasks to Gemini 2.5 Pro.

The system is also ideal “for personal assistants in various domains, where users have a diversity of tasks, from text summarization to factoid queries,” said Paracha, adding that “in those cases, Arch-Router can help developers unify and improve the overall user experience.”

This framework is integrated with Arch, Katanemo Labs' AI-native proxy server for agents, which allows developers to implement sophisticated traffic-shaping rules. For example, when integrating a new LLM, a team can send a small portion of traffic for a specific routing policy to the new model, verify its performance with internal metrics and then fully transition traffic with confidence. The company is also working to integrate its tools with evaluation platforms to streamline this process further for enterprise developers.
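The gradual rollout described above amounts to a weighted split of traffic within a single policy. The sketch below shows one way such a split could work; it is not the configuration format or API of the Katanemo Labs proxy, and the function name, policy name, model labels and 10% share are hypothetical.

```python
import random
from typing import Dict, List, Tuple

# Policy name -> list of (model label, traffic weight); all names hypothetical.
Splits = Dict[str, List[Tuple[str, float]]]


def pick_model(policy: str, splits: Splits, rng: random.Random) -> str:
    """Choose a model for one request according to the policy's weights."""
    models, weights = zip(*splits[policy])
    return rng.choices(models, weights=weights, k=1)[0]


splits: Splits = {
    # Send roughly 10% of code generation traffic to the new model.
    "code_generation": [("current-model", 0.9), ("new-model", 0.1)],
    # Policies without an experiment keep a single model.
    "document_creation": [("current-model", 1.0)],
}

rng = random.Random(0)  # seeded so the sketch is reproducible
sample = [pick_model("code_generation", splits, rng) for _ in range(10_000)]
new_share = sample.count("new-model") / len(sample)
```

Once the new model's internal metrics look acceptable, finishing the transition is a matter of changing the weights to 0.0 and 1.0.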

Ultimately, the goal is to move beyond siloed AI implementations. “Arch-Router, and Arch more broadly, helps developers and enterprises move from fragmented LLM implementations to a unified, policy-driven system,” says Paracha. “In scenarios where user tasks are diverse, our framework helps turn that task and LLM fragmentation into a unified experience, making the final product feel seamless to the end user.”